
How to Deploy Microsoft Fabric in Multicloud Infrastructures

Microsoft Fabric’s data analytics, combined with the power of a multi-cloud architecture, drives decision-making and empowers users.

1. Introduction

Microsoft Fabric enables effective data management, which is no longer optional for organizations but an obligation. It is critical to making informed decisions and leading in an increasingly demanding and competitive business environment.

Combining data analytics with a multi-cloud infrastructure is key to collecting, processing and visualizing data across multiple cloud platforms.

This guide explores, step by step, how Microsoft Fabric can help organizations make the most of their data in a distributed environment. Join us!

2. What is Microsoft Fabric?

Microsoft Fabric is an all-in-one analytics platform that spans the entire data lifecycle, from data intake and transformation to storage.

It includes powerful data science, real-time analytics and business intelligence capabilities. It integrates all these functionalities in one repository and eliminates the need to rely on multiple providers.

In addition, it stands out for its low-code/no-code user interface, so even business users with no programming experience can take advantage of it. This empowers business teams and maximizes efficiency in the use of data.

3. Advantages of using Microsoft Fabric in a multi-cloud infrastructure

Deploying Microsoft Fabric in a multi-cloud infrastructure offers significant advantages:

– It unifies data management in a single solution, simplifying operations, improving decision making and empowering business users.

– As a result, users achieve greater operational efficiency, more agility to adapt to changing needs, and higher levels of security and compliance.

– At the same time, it leverages the specific capabilities of multiple cloud providers.

4. The implementation process step-by-step

4.1 Objectives and scope

Defining the project's objectives and scope is a fundamental step in implementing any initiative. It involves identifying the expected achievements and the limits of the project.

What data needs to be moved? How often is it updated? What systems depend on it? The answers to these questions provide valuable context.

Consideration should be given to the allocated budget, the anticipated benefits, and the potential risks inherent in the implementation.

It is also crucial to ensure that all stakeholders understand the objectives and boundaries of the project. Transparent communication and effective alignment with stakeholders ensure the necessary support throughout the process.

4.2 Prerequisites and initial configuration

In Microsoft Fabric there is no need to deal with complex initial configurations. With just a few clicks users can start taking full advantage of this powerful data analysis and management tool.

To enable Microsoft Fabric’s preview features, certain prerequisites must be met:

– Have an organizational-level tenant in the Microsoft environment.

– Have an administrator role in Microsoft 365, Power Platform or Fabric.

Then, the initial configuration process is straightforward. Simply access the tenant settings and enable Microsoft Fabric for the entire organization or for a specific group of users.

4.3 Identification of data sources

This process allows users to understand the complexity of the data to be transferred between different cloud environments and to ensure its successful migration.

The key steps to perform this task effectively are:

– Data source inventory: includes all data sources in the current infrastructure: databases, file systems, applications, web services and any other data repositories.

– Data classification: ranks each data source by its importance and criticality to the business. Some sources may be essential, while others may be less relevant. This classification helps prioritize the migration.

– Dependency analysis: identifies dependencies between different data sets and applications. Some may be interdependent and should be migrated together to avoid consistency issues.

– Data quality assessment: performed on each source and includes data accuracy, completeness and consistency. Poor quality data may require cleaning prior to migration.

– Data mapping: creates a detailed map indicating where data comes from, how it is used and where it should be located in the target multicloud environment. This includes defining data structures, formats and schemas.
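The inventory, classification and dependency analysis steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `DataSource` fields, the 1-to-3 criticality scale and the sample source names are hypothetical, and nothing here calls a Microsoft Fabric API. The sketch groups sources into migration waves so that each source is moved after the sources it depends on, with the most critical sources first within each wave.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter  # standard library, Python 3.9+


@dataclass(frozen=True)
class DataSource:
    """One entry in the data source inventory (all fields are illustrative)."""
    name: str
    kind: str                          # e.g. "database", "file system", "web service"
    criticality: int                   # assumed scale: 1 = essential, 3 = less relevant
    depends_on: tuple[str, ...] = ()   # names of other inventory entries


def migration_waves(inventory: list[DataSource]) -> list[list[str]]:
    """Group sources into waves: a source only appears after everything it
    depends on, and within a wave the most critical sources come first.
    Every name in depends_on is assumed to be present in the inventory."""
    by_name = {source.name: source for source in inventory}
    sorter = TopologicalSorter({s.name: s.depends_on for s in inventory})
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready(),
                       key=lambda name: (by_name[name].criticality, name))
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical inventory used only to exercise the function.
inventory = [
    DataSource("crm_db", "database", criticality=1),
    DataSource("web_logs", "file system", criticality=3),
    DataSource("sales_api", "web service", criticality=2, depends_on=("crm_db",)),
    DataSource("sales_reports", "application", criticality=1,
               depends_on=("sales_api", "crm_db")),
]

for number, wave in enumerate(migration_waves(inventory), start=1):
    print(f"Wave {number}: {', '.join(wave)}")
```

In a real project the inventory would more likely live in a catalog or spreadsheet than in code, but the same ordering logic applies: interdependent sources end up in adjacent waves, which makes consistency issues easier to spot before migration.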

4.4 Data intake and data preparation

In the world of data and analytics, data intake and data preparation are the foundation on which informed business decisions are built.

In this context, Microsoft Fabric offers two powerful tools – Pipelines and Dataflows – that simplify these crucial processes and deliver exceptional benefits by unifying data from multiple clouds.

Both allow information to be brought in from different clouds (Azure, AWS, GCP, among others) with native plug-ins that are configured with a few basic parameters. Their simplicity makes data input accessible even to non-technical users.

Pipelines is a complete and versatile solution for data ingestion. Its ability to connect multiple sources – from local databases to clouds and third-party applications – makes it the perfect partner for consolidating dispersed data.

Automating intake and scheduling it at regular intervals is an invaluable advantage. Data can be extracted and transformed in real time or according to a predefined schedule, with the certainty that it is always up-to-date and ready for analysis.

Power BI Dataflow, on the other hand, facilitates the preparation of data in an agile and powerful way for the transformation stage. It allows users to create ETL (Extract, Transform, Load) processes in an intuitive drag-and-drop interface. It is also possible to apply filters, clean data, perform aggregations and combine multiple sources without the need for complex coding.
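For readers who think in code, the logical steps named above (filter, clean, aggregate, combine sources) look roughly like the following plain-Python sketch. It is not Dataflow or Fabric code, since Dataflows are built in a visual interface; the record layout, field names and sample values are all hypothetical and exist only to make the steps concrete.

```python
from collections import defaultdict

# Hypothetical order records landed from two different clouds.
orders_cloud_a = [
    {"order_id": 1, "region": " emea ", "amount": 120.0},
    {"order_id": 2, "region": "AMER", "amount": None},  # missing amount
]
orders_cloud_b = [
    {"order_id": 3, "region": "emea", "amount": 80.0},
    {"order_id": 4, "region": "apac", "amount": 50.0},
]


def clean(row):
    """Normalize the region label so the same region always compares equal."""
    return {**row, "region": row["region"].strip().upper()}


def total_by_region(*sources):
    """Combine the sources, drop incomplete rows, clean, then aggregate."""
    totals = defaultdict(float)
    for source in sources:                  # combine multiple sources
        for row in source:
            if row["amount"] is None:       # filter out incomplete rows
                continue
            row = clean(row)                # clean
            totals[row["region"]] += row["amount"]  # aggregate per region
    return dict(totals)


print(total_by_region(orders_cloud_a, orders_cloud_b))
```

The point of the sketch is the order of operations: rows with missing values are filtered before aggregation, and labels are normalized before grouping, so that " emea " and "emea" are counted as the same region.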

One of the most outstanding advantages of Dataflow is its reusability. The transformation workflows created can be shared and used in various Power BI reports and dashboards. This saves time and ensures consistency in analysis.

4.5 Selecting the right storage

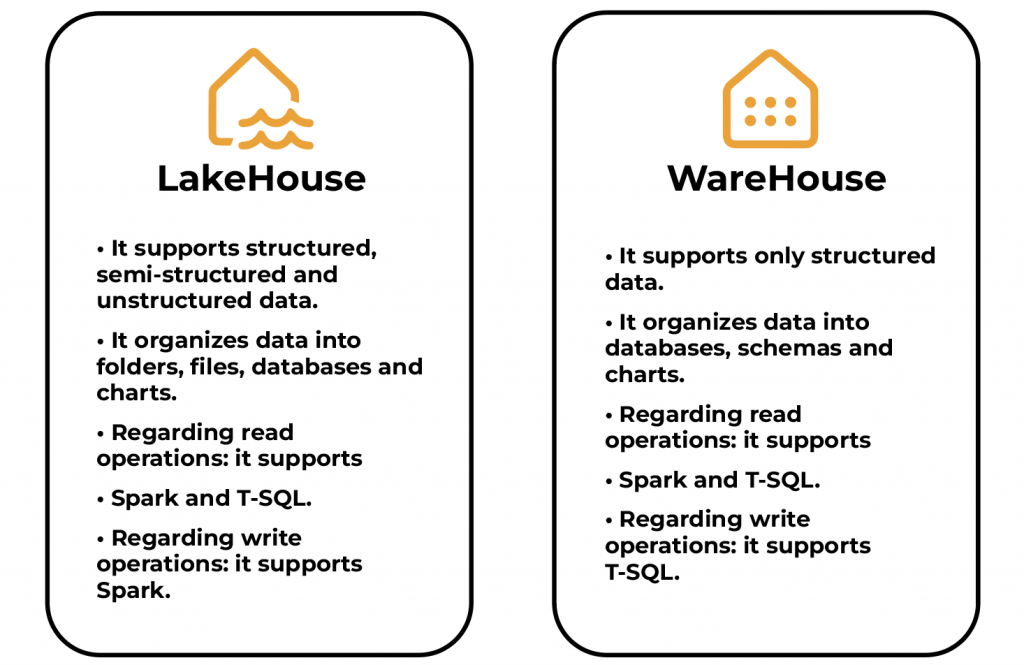

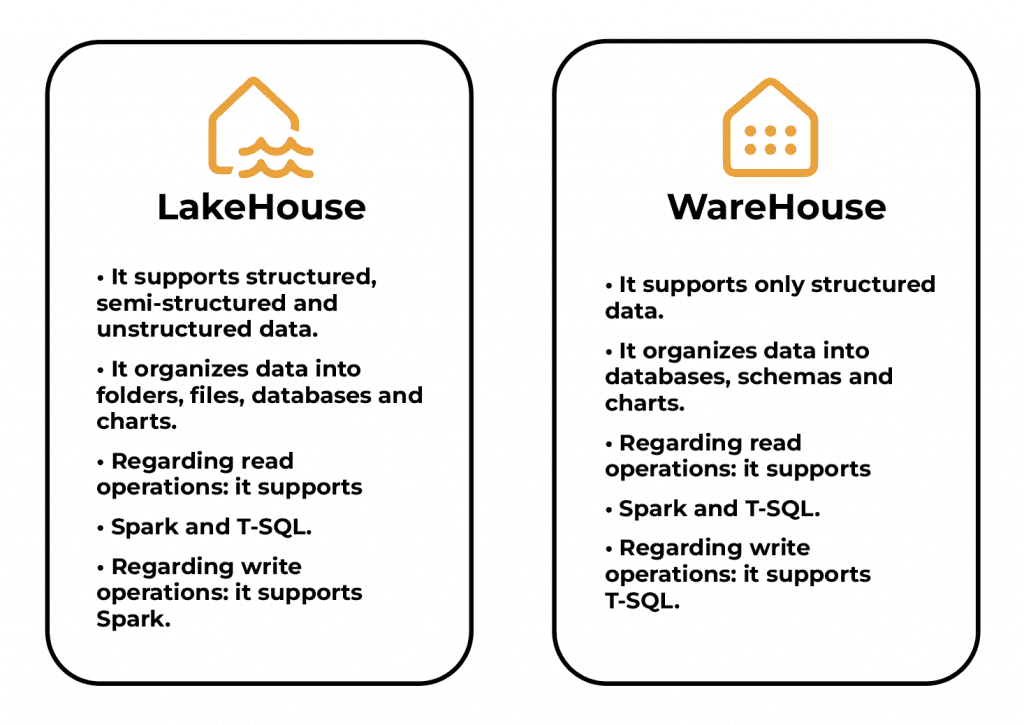

Depending on the initial requirements, the type of data and the queries to be performed, users can choose between a Data Warehouse or a Lakehouse:

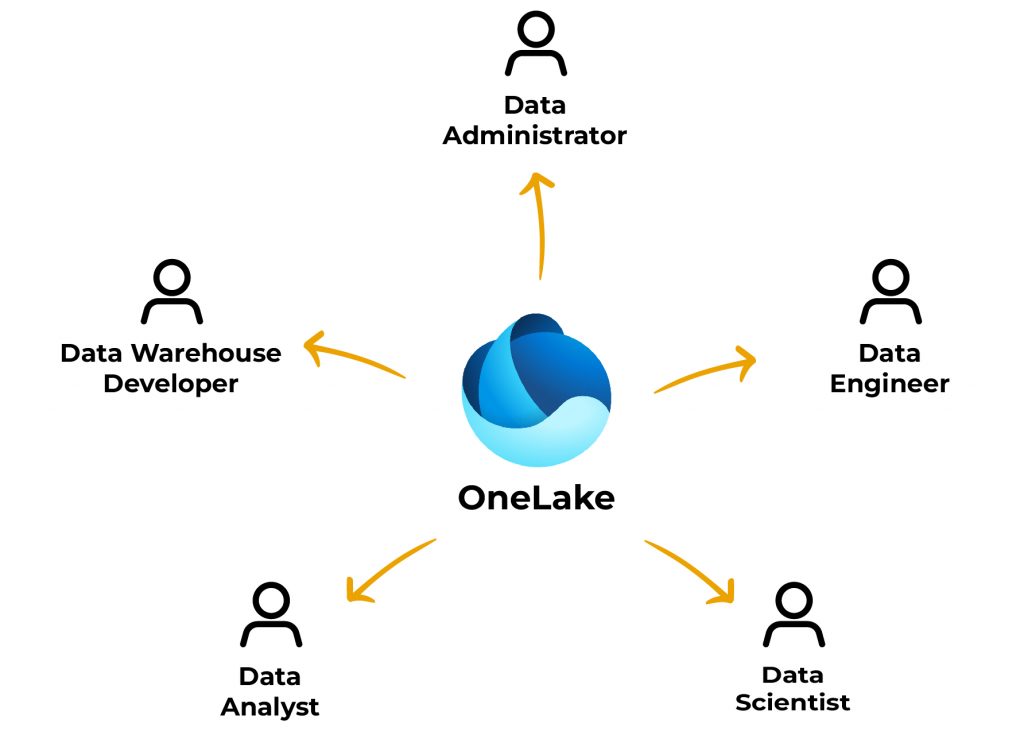

The solid foundations of enterprise data have already been laid and unified in Microsoft Fabric’s OneLake. The doors are now open to more ambitious challenges of data analytics, machine learning models and much more.

4.6 Collaborative development in a single environment

In addition to simplifying data management, Microsoft Fabric encourages collaboration. In this single centralized environment, multidisciplinary teams can work together more efficiently and effectively.

Data analysts, data scientists, developers and business experts access the same data and tools, accelerating the development process.

This means users can create smarter and more agile solutions that adapt to changing business needs.

5. Conclusions

Microsoft Fabric in a multi-cloud infrastructure:

- Enables simpler integrations at lower costs.

- Reduces the number of vendors and tools.

- Provides an end-to-end solution that unlocks the power of data.

- Requires no data movement and eliminates the need to duplicate data.

- Provides a unified SaaS environment with a single pricing structure. This promotes transparency and facilitates consumption analysis.

- Incorporates security and data governance.

Although Fabric is a recently launched product, Microsoft has announced plans to create migration paths that streamline moving data architectures built in Azure to the Fabric ecosystem.

We will have to wait a little longer to benefit from this upcoming feature, but one thing is for sure: in the future, the benefits will be enhanced.

Ready to transform your Microsoft Fabric operations into a multi-cloud infrastructure? Contact us to get personalized advice and start your journey towards more efficient data management. Schedule your meeting today!
